onelearn.AMFClassifier

class onelearn.AMFClassifier(n_classes, n_estimators=10, step=1.0, loss='log', use_aggregation=True, dirichlet=None, split_pure=False, n_jobs=1, n_samples_increment=1024, random_state=None, verbose=False)

Aggregated Mondrian Forest classifier for online learning. This algorithm is truly online, in the sense that a single pass is performed, and that predictions can be produced anytime.

Each node in a tree predicts according to the distribution of the labels it contains. This distribution is regularized using a Dirichlet prior with parameter dirichlet (the “Jeffreys” prior when dirichlet = 0.5). For each class with count labels in the node, and n_samples samples in the node, the prediction of the node is given by

(count + dirichlet) / (n_samples + dirichlet * n_classes)

The prediction for a sample is computed by aggregating the predictions of all the subtrees along the path leading to the leaf node containing the sample. When use_aggregation is True, the aggregation weights are exponential weights with learning rate step and loss loss. This computation is performed exactly thanks to a context tree weighting algorithm. More details can be found in the paper cited in the references below.
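The node prediction and the path aggregation described above can be sketched in plain Python. This is a simplified illustration based on the context tree weighting recursion from the AMF paper, not onelearn's implementation: the function names are hypothetical, it assumes each node stores a log weight log w(v) = -step × (cumulative loss of v), and the log weights in the toy example are made up rather than learned from data.

```python
import math


def node_prediction(counts, dirichlet):
    """Dirichlet-smoothed class distribution of one node.

    counts[k] is the number of samples of class k in the node, so that
    n_samples = sum(counts) and n_classes = len(counts).
    """
    n_samples = sum(counts)
    n_classes = len(counts)
    return [(c + dirichlet) / (n_samples + dirichlet * n_classes) for c in counts]


def log_weight_tree(log_weight, children_log_weight_trees=()):
    """Context tree weighting quantity of one node, in log scale.

    Assumption: log_weight = log w(v) = -step * (cumulative loss of node v).
    For a leaf, w_tree(v) = w(v); for an internal node,
    w_tree(v) = 0.5 * w(v) + 0.5 * w_tree(left) * w_tree(right).
    """
    if not children_log_weight_trees:
        return log_weight
    a = log_weight
    b = sum(children_log_weight_trees)  # log of the product of the children terms
    m = max(a, b)  # log-sum-exp trick for numerical stability
    return math.log(0.5) + m + math.log(math.exp(a - m) + math.exp(b - m))


def aggregate_path(preds, log_weights, log_weight_trees):
    """Aggregate node predictions along a path ordered from root to leaf."""
    pred = list(preds[-1])  # start from the leaf's own prediction
    # Walk back up towards the root, mixing in each node's own prediction.
    for node_pred, lw, lwt in zip(reversed(preds[:-1]),
                                  reversed(log_weights[:-1]),
                                  reversed(log_weight_trees[:-1])):
        alpha = 0.5 * math.exp(lw - lwt)  # share kept by the node itself
        pred = [alpha * p + (1.0 - alpha) * q for p, q in zip(node_pred, pred)]
    return pred


# Toy tree: a root (counts [3, 1]) split into two leaves ([3, 0] and [0, 1]).
dirichlet = 0.5
lw_root, lw_left, lw_right = -1.0, -0.5, -0.2  # made-up log weights
lwt_root = log_weight_tree(lw_root, (lw_left, lw_right))
proba = aggregate_path(
    [node_prediction([3, 1], dirichlet), node_prediction([3, 0], dirichlet)],
    [lw_root, lw_left],
    [lwt_root, lw_left],  # a leaf's w_tree equals its own weight
)
print(proba)
```

The aggregated prediction is a convex combination of the root and leaf predictions, so it always lies between them and still sums to one.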

The final predictions are the average class probabilities predicted by each of the n_estimators trees in the forest.

Note

All the parameters of AMFClassifier become read-only after the first call to partial_fit.

References

- J. Mourtada, S. Gaiffas and E. Scornet, AMF: Aggregated Mondrian Forests for Online Learning, arXiv:1906.10529, 2019

__init__(n_classes, n_estimators=10, step=1.0, loss='log', use_aggregation=True, dirichlet=None, split_pure=False, n_jobs=1, n_samples_increment=1024, random_state=None, verbose=False)

Instantiates an AMFClassifier instance.

Parameters:
- n_classes (int) – Number of expected classes in the labels. This is required since the number of classes is not known in advance in an online setting.
- n_estimators (int, default = 10) – The number of trees in the forest.
- step (float, default = 1) – Step-size for the aggregation weights. Default is 1 for classification with the log-loss, which is usually the best choice.
- loss ({"log"}, default = "log") – The loss used for the computation of the aggregation weights. Only “log” is supported for now, namely the log-loss for multi-class classification.
- use_aggregation (bool, default = True) – Controls if aggregation is used in the trees. It is highly recommended to leave it as True.
- dirichlet (float or None, default = None) – Regularization level of the class frequencies used for predictions in each node. When None, dirichlet=0.5 is used for n_classes=2 and dirichlet=0.01 otherwise.
- split_pure (bool, default = False) – Controls if nodes that contain only samples of the same class (“pure” nodes) should be split. Default is False, namely pure nodes are not split, but True can sometimes be better.
- n_jobs (int, default = 1) – Sets the number of threads used to grow the trees in parallel. The default is n_jobs=1, namely single-threaded. For now, this parameter has no effect and only a single thread can be used.
- n_samples_increment (int, default = 1024) – Sets the minimum amount of memory which is pre-allocated each time extra memory is required for new samples and new nodes. Decreasing it can slow down training. If you know that each call to partial_fit will use approximately n samples, you can set n_samples_increment = n if n is larger than the default.
- random_state (int or None, default = None) – Controls the randomness involved in the trees.
- verbose (bool, default = False) – Controls the verbosity when fitting and predicting.
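The documented defaults for dirichlet and the advice on n_samples_increment can be written as two small rules. The helpers below are hypothetical, not part of onelearn; they only restate the parameter descriptions above in code.

```python
def resolve_dirichlet(n_classes, dirichlet=None):
    """Value of dirichlet used when the parameter is left to None.

    Restates the documented defaults: 0.5 for n_classes=2, 0.01 otherwise.
    """
    if dirichlet is not None:
        return dirichlet
    return 0.5 if n_classes == 2 else 0.01


def suggest_n_samples_increment(batch_size, default=1024):
    """Pick n_samples_increment from the typical partial_fit batch size.

    Restates the documented advice: use the batch size n only when n is
    larger than the default.
    """
    return max(batch_size, default)


# A 3-class stream fed in batches of about 5000 samples.
print(resolve_dirichlet(3))               # 0.01
print(suggest_n_samples_increment(5000))  # 5000
```

Such values would have to be passed to AMFClassifier at construction time, since its parameters become read-only after the first call to partial_fit.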

Methods

__init__(n_classes[, n_estimators, step, …]) – Instantiates an AMFClassifier instance.
get_nodes_df(idx_tree)
partial_fit(X, y[, classes]) – Updates the classifier with the given batch of samples.
partial_fit_helper(X, y) – Updates the classifier with the given batch of samples.
predict_helper(X) – Helper method for the predictions of the given feature vectors.
predict_proba(X) – Predicts the class probabilities for the given feature vectors.
predict_proba_tree(X, tree) – Predicts the class probabilities for the given feature vectors using the single tree at index tree.
weighted_depth_helper(X)

Attributes

dirichlet – Regularization level of the class frequencies.
loss – The loss used for the computation of the aggregation weights.
n_classes – Number of expected classes in the labels.
n_estimators – Number of trees in the forest.
n_features – Number of features used during training.
n_jobs – Number of threads used to grow the trees in parallel.
n_samples_increment – Amount of memory pre-allocated each time extra memory is required.
random_state – Controls the randomness involved in the trees.
split_pure – Controls if nodes that contain only samples of the same class should be split.
step – Step-size for the aggregation weights.
use_aggregation – Controls if aggregation is used in the trees.
verbose – Controls the verbosity when fitting and predicting.

dirichlet – Regularization level of the class frequencies.

Type: float or None

loss – The loss used for the computation of the aggregation weights.

Type: str

n_classes – Number of expected classes in the labels.

Type: int

n_estimators – Number of trees in the forest.

Type: int

n_features – Number of features used during training.

Type: int

n_jobs – Number of threads used to grow the trees in parallel.

Type: int

n_samples_increment – Amount of memory pre-allocated each time extra memory is required.

Type: int

partial_fit(X, y, classes=None)

Updates the classifier with the given batch of samples.

Parameters:
- X (np.ndarray, shape=(n_samples, n_features)) – Input features matrix.
- y (np.ndarray) – Input labels vector.
- classes (None) – Must not be used, only here for backwards compatibility.

Returns: output – Updated instance of AMFClassifier

Return type: AMFClassifier
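A common way to use partial_fit on a stream is a test-then-train (prequential) loop: each incoming batch is first scored, then used for training exactly once. The sketch below is generic and only relies on partial_fit(X, y) and predict_proba(X); prequential_log_loss and SmoothedFrequencyStub are hypothetical names, the stub merely stands in for an AMFClassifier so the example runs without onelearn, and labels are assumed to be the integers 0, ..., n_classes - 1.

```python
import math


def prequential_log_loss(clf, batches, eps=1e-15):
    """Test-then-train evaluation of an online classifier.

    clf exposes partial_fit(X, y) and predict_proba(X); batches yields
    (X, y) pairs with integer labels. Returns the average log-loss over
    the scored samples, or nan if no batch could be scored.
    """
    total, n_scored, fitted = 0.0, 0, False
    for X, y in batches:
        if fitted:
            # Score the batch before the model is updated with it.
            for row, label in zip(clf.predict_proba(X), y):
                total -= math.log(max(row[label], eps))
                n_scored += 1
        # Single pass: every batch is used for training exactly once.
        clf.partial_fit(X, y)
        fitted = True
    return total / n_scored if n_scored else float("nan")


class SmoothedFrequencyStub:
    """Hypothetical stand-in classifier: smoothed global class frequencies."""

    def __init__(self, n_classes, dirichlet=0.5):
        self.counts = [0] * n_classes
        self.dirichlet = dirichlet

    def partial_fit(self, X, y):
        for label in y:
            self.counts[label] += 1
        return self

    def predict_proba(self, X):
        n, k = sum(self.counts), len(self.counts)
        proba = [(c + self.dirichlet) / (n + self.dirichlet * k) for c in self.counts]
        return [list(proba) for _ in X]


batches = [([[0.1], [0.4]], [0, 0]), ([[0.3]], [0])]
print(prequential_log_loss(SmoothedFrequencyStub(n_classes=2), batches))
```

The first batch is only used for training, since a model that has seen no data has nothing to predict from. An AMFClassifier could be passed in place of the stub, configured fully at construction time because its parameters become read-only after the first call to partial_fit.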

partial_fit_helper(X, y)

Updates the classifier with the given batch of samples.

Parameters:
- X (np.ndarray, shape=(n_samples, n_features)) – Input features matrix.
- y (np.ndarray) – Input labels vector.

Returns: output – Updated instance of AMFClassifier

Return type: AMFClassifier

predict_helper(X)

Helper method for the predictions of the given feature vectors. This is used in the predict and predict_proba methods of AMFRegressor and AMFClassifier.

Parameters: X (np.ndarray, shape=(n_samples, n_features)) – Input features matrix to predict for.

Returns: output – Returns the predictions for the input features.

Return type: np.ndarray

predict_proba(X)

Predicts the class probabilities for the given feature vectors.

Parameters: X (np.ndarray, shape=(n_samples, n_features)) – Input features matrix to predict for.

Returns: output – Returns the predicted class probabilities for the input features.

Return type: np.ndarray, shape=(n_samples, n_classes)
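To turn the probabilities returned by predict_proba into hard labels, one can take the row-wise argmax. A minimal sketch, assuming labels are encoded as 0, ..., n_classes - 1; predict_labels is a hypothetical helper, and with NumPy arrays probas.argmax(axis=1) gives the same result.

```python
def predict_labels(probas):
    """Row-wise argmax of class probabilities; ties go to the smallest index."""
    # max() returns the first maximal index, which breaks ties towards class 0.
    return [max(range(len(row)), key=row.__getitem__) for row in probas]


print(predict_labels([[0.2, 0.8], [0.6, 0.4], [0.5, 0.5]]))  # [1, 0, 0]
```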

-

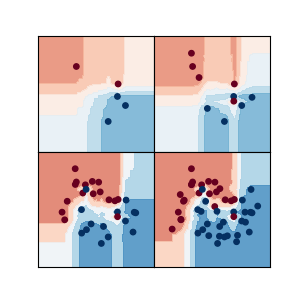

predict_proba_tree(X, tree)

Predicts the class probabilities for the given feature vectors using the single tree at index tree. This should be used only for debugging or visualisation purposes.

Parameters:
- X (np.ndarray, shape=(n_samples, n_features)) – Input features matrix to predict for.
- tree (int) – Index of the tree, must be between 0 and n_estimators - 1.

Returns: output – Returns the predicted class probabilities for the input features.

Return type: np.ndarray, shape=(n_samples, n_classes)
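Since the final predictions of the forest are described as the average of the per-tree class probabilities, averaging the outputs of predict_proba_tree(X, t) over t in range(n_estimators) should, in principle, match predict_proba. A minimal sketch of that averaging on plain nested lists; average_tree_probas is a hypothetical helper, and with NumPy the same is np.mean(np.stack(tree_probas), axis=0).

```python
def average_tree_probas(tree_probas):
    """Average per-tree class probabilities into forest-level probabilities.

    tree_probas[t][i][k] is the probability of class k for sample i given by
    tree t, for instance the output of predict_proba_tree(X, t).
    """
    n_trees = len(tree_probas)
    return [
        # For sample i, zip(*rows) groups the trees' values class by class.
        [sum(values) / n_trees for values in zip(*rows)]
        for rows in zip(*tree_probas)  # one tuple of per-tree rows per sample
    ]


# Two trees, two samples, two classes.
trees = [[[0.8, 0.2], [0.4, 0.6]], [[0.6, 0.4], [0.2, 0.8]]]
forest_proba = average_tree_probas(trees)
print(forest_proba)
```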

random_state – Controls the randomness involved in the trees.

Type: int or None

split_pure – Controls if nodes that contain only samples of the same class should be split.

Type: bool

step – Step-size for the aggregation weights.

Type: float

use_aggregation – Controls if aggregation is used in the trees.

Type: bool

verbose – Controls the verbosity when fitting and predicting.

Type: bool